What is Apache Airflow?

Airflow is an open source orchestration tool for programmatically authoring, scheduling, and monitoring workflows such as data pipelines. It lets you automate ETL tasks for data engineering and build workflows with complex dependencies.

Source: https://airflow.apache.org/docs/apache-airflow/2.0.1/concepts.html

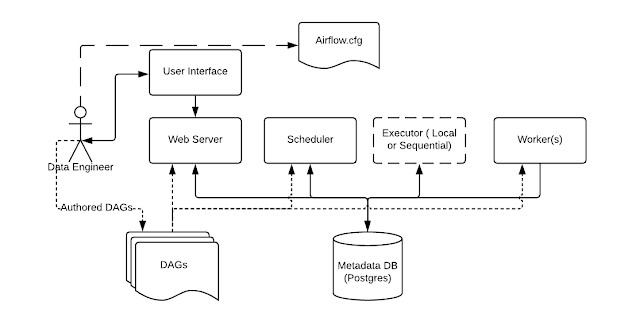

Airflow mainly consists of the following components:

- Web Server: The web server is the UI that gives an overview of the overall health of the different Directed Acyclic Graphs (DAGs) and visualizes the components and states of each DAG. You can also manage users, roles, and different configurations for the Airflow setup on the web server.

- Scheduler: The scheduler orchestrates the various DAGs and their tasks, taking care of their interdependencies, limiting the number of runs of each DAG so that one DAG doesn’t overwhelm the entire system, and making it easy for users to schedule and run DAGs on Airflow.

- Executor: The executors are the components that actually execute tasks. There are various types of executors that come with Airflow, such as SequentialExecutor, LocalExecutor, CeleryExecutor, and the KubernetesExecutor. People usually select the executor that suits their use case best. We will cover the details later in this blog.

- Metadata Database: The database stores metadata about DAGs, their runs, and other Airflow configurations like users, roles, and connections. The Web Server shows the DAGs’ states and their runs from the database. The Scheduler also updates this information in this metadata database.

- Worker(s): Workers are separate processes that also interact with the other Airflow components and the metadata database.

- airflow.cfg: This file contains the Airflow configuration accessed by the web server, scheduler, and workers.

- DAGs: A DAG is a workflow in which you specify the dependencies between tasks, the order in which to execute them, retries on failure, and so on. DAG files contain Python code, and the location of these files is specified in airflow.cfg. They need to be accessible by the web server, scheduler, and workers.
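To make the idea of a DAG a little more concrete, here is a minimal sketch that uses only the Python standard library (graphlib, Python 3.9+), not Airflow itself. The task names are hypothetical, and this is just an illustration of how dependencies determine a valid run order and why cycles are not allowed.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical ETL workflow: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_order(deps):
    """Return one valid run order, or raise CycleError if deps contains a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)

# Two tasks that depend on each other form a cycle, so no valid order exists.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
    has_cycle = False
except CycleError:
    has_cycle = True
print("cycle rejected:", has_cycle)
```

Because this example is a simple chain, there is only one valid order. With branching dependencies, several orders can be valid.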

So what can we do with these components?

You can author workflows as Directed Acyclic Graphs (DAGs) of tasks using Airflow. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.
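As a rough illustration of "executing tasks on an array of workers while following the specified dependencies", the sketch below hands ready tasks to a small thread pool and only releases a task once everything upstream has finished. It is a toy model built on the standard library, not how the Airflow scheduler or its executors are implemented. The task names and the run_task function are assumptions made for the example.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

# Hypothetical workflow: the two extract tasks are independent and may run in parallel.
deps = {
    "extract_orders": set(),
    "extract_users": set(),
    "join": {"extract_orders", "extract_users"},
    "report": {"join"},
}


def run_task(name):
    """Stand-in for real work; returns the task name when finished."""
    return name


def run_workflow(deps, max_workers=2):
    """Run every task on a worker pool, never starting a task before its upstream tasks."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    finished = []
    pending = set()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Submit every task whose dependencies have all completed.
            for task in sorter.get_ready():
                pending.add(pool.submit(run_task, task))
            # Block until at least one running task completes.
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                name = future.result()
                finished.append(name)
                sorter.done(name)  # unlocks downstream tasks
    return finished


finished = run_workflow(deps)
print(finished)
```

The two extract tasks may finish in either order, but join always comes after both of them and report always comes last.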

When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative.

The main features of Airflow are:

- Programmable: All workflows are scripted in Python, which makes them more maintainable, versionable, testable, and collaborative.

- Supports ETL best practices: Time travelling to catch up on data as of a past date, running transformations in Docker containers, and extending schedules by running cloud-native services are all possible.

- Distributed: It can run in a distributed mode to keep up with the scale of data pipelines.

- Observable: You can easily monitor the workflows using web server dashboards, notifications, etc.
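The "time travelling" feature mentioned above can be pictured as listing every schedule interval that has already passed since the start date and running the workflow once per interval. The sketch below is a simplified model of that idea in plain Python. The function name missed_intervals is hypothetical and this is not Airflow's scheduling code.

```python
from datetime import datetime, timedelta, timezone


def missed_intervals(start_date, interval, now):
    """Return (interval_start, interval_end) for every full interval that has ended by now."""
    intervals = []
    cursor = start_date
    while cursor + interval <= now:
        intervals.append((cursor, cursor + interval))
        cursor += interval
    return intervals


start = datetime(2022, 1, 1, tzinfo=timezone.utc)
now = datetime(2022, 1, 1, 1, 0, tzinfo=timezone.utc)  # one hour after the start date

runs = missed_intervals(start, timedelta(minutes=15), now)
for interval_start, interval_end in runs:
    print(interval_start.isoformat(), "->", interval_end.isoformat())
```

With a 15-minute interval and one hour of elapsed time, this yields four intervals, each of which would correspond to one catch-up run.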

Quickstart: Running Airflow Locally

1. Set environment variable.

By default, DAGs, the configuration file, and the log folder are located in AIRFLOW_HOME.
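As a small sketch of that default layout, the helper below resolves AIRFLOW_HOME (falling back to ~/airflow when the variable is unset) and derives the usual locations from it. The function name airflow_layout is made up for this example, and the DAG and log folders shown are only the defaults, which can be overridden in airflow.cfg.

```python
import os
from pathlib import Path


def airflow_layout(environ=None):
    """Return the default Airflow paths derived from AIRFLOW_HOME."""
    env = os.environ if environ is None else environ
    home = Path(env.get("AIRFLOW_HOME", "~/airflow")).expanduser()
    return {
        "home": home,
        "config": home / "airflow.cfg",  # configuration file
        "dags": home / "dags",           # default DAG folder
        "logs": home / "logs",           # default log folder
    }


# Example with an explicit, hypothetical home directory.
layout = airflow_layout({"AIRFLOW_HOME": "/tmp/airflow_demo"})
for name, path in layout.items():
    print(f"{name}: {path}")
```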

export AIRFLOW_HOME=~/airflow

2. Install Airflow

pip install apache-airflow

3. Initialize the metadata database

airflow db init

4. Add admin

airflow users create \
    --role Admin \
    --username <username> \
    --firstname <firstname> \
    --lastname <lastname> \
    --email <email> \
    --password <password>

5. Start the web server

airflow webserver --port 8080

Open http://localhost:8080 in the browser and check that the web server is running.
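If you prefer to check from a script rather than the browser, the web server also exposes a /health endpoint that reports the status of the metadata database and the scheduler as JSON. The sketch below assumes the server is reachable at http://localhost:8080. The helper names fetch_health and is_healthy are hypothetical, and the sample payload only mimics the shape of a real response.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url="http://localhost:8080", timeout=5):
    """Fetch and decode the JSON document served at <base_url>/health."""
    with urlopen(f"{base_url}/health", timeout=timeout) as response:
        return json.load(response)


def is_healthy(payload):
    """Return True only if both the metadata database and the scheduler report healthy."""
    components = ("metadatabase", "scheduler")
    return all(payload.get(name, {}).get("status") == "healthy" for name in components)


# Sample payload shaped like a /health response before the scheduler has been started.
sample = {
    "metadatabase": {"status": "healthy"},
    "scheduler": {"status": "unhealthy", "latest_scheduler_heartbeat": None},
}
print(is_healthy(sample))

# Against a running web server you would call: is_healthy(fetch_health())
```

At this point in the quickstart the scheduler has not been started yet, so an unhealthy scheduler status is expected until the next step is done.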

6. Start the scheduler

airflow scheduler

7. Create a test DAG

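from datetime import timedelta

import pendulum  # installed with Airflow; its datetime() accepts tz="UTC", unlike the standard library's

from airflow.models import DAG
from airflow.operators.bash import BashOperator
from airflow.operators.dummy import DummyOperator

dag = DAG(
    dag_id='my_dag',
    start_date=pendulum.datetime(2022, 1, 1, tz="UTC"),
    schedule_interval=timedelta(minutes=15),  # run every 15 minutes
    catchup=False,  # do not backfill runs between start_date and now
)

start = DummyOperator(task_id='dummy_task', dag=dag)  # placeholder task that does nothing
final = BashOperator(task_id='hello', bash_command='echo hello', dag=dag)  # prints "hello"

start >> final  # final runs only after start has succeeded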
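The start >> final line may look unusual if you have not seen Python operator overloading before. The toy class below, which is not Airflow's operator class, sketches how >> can be made to record a downstream dependency. The Task class and its downstream attribute are assumptions for illustration only.

```python
class Task:
    """Toy stand-in for an operator that remembers which tasks run after it."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def __rshift__(self, other):
        # a >> b records b as downstream of a and returns b so chains keep going.
        self.downstream.append(other)
        return other


start = Task("dummy_task")
final = Task("hello")
start >> final

# Returning the right-hand task lets a >> b >> c read left to right.
a, b, c = Task("a"), Task("b"), Task("c")
a >> b >> c

print([t.task_id for t in start.downstream])
print([t.task_id for t in a.downstream], [t.task_id for t in b.downstream])
```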

8. Test the DAG, check the status on the web server
